Should your company name its AI agents or keep them anonymous? It sounds like a branding decision, but it’s quickly becoming a strategic one.

AI agents are taking over critical workflows across industries, and how people interact with them now determines whether those systems earn trust, drive adoption, and deliver real performance gains.

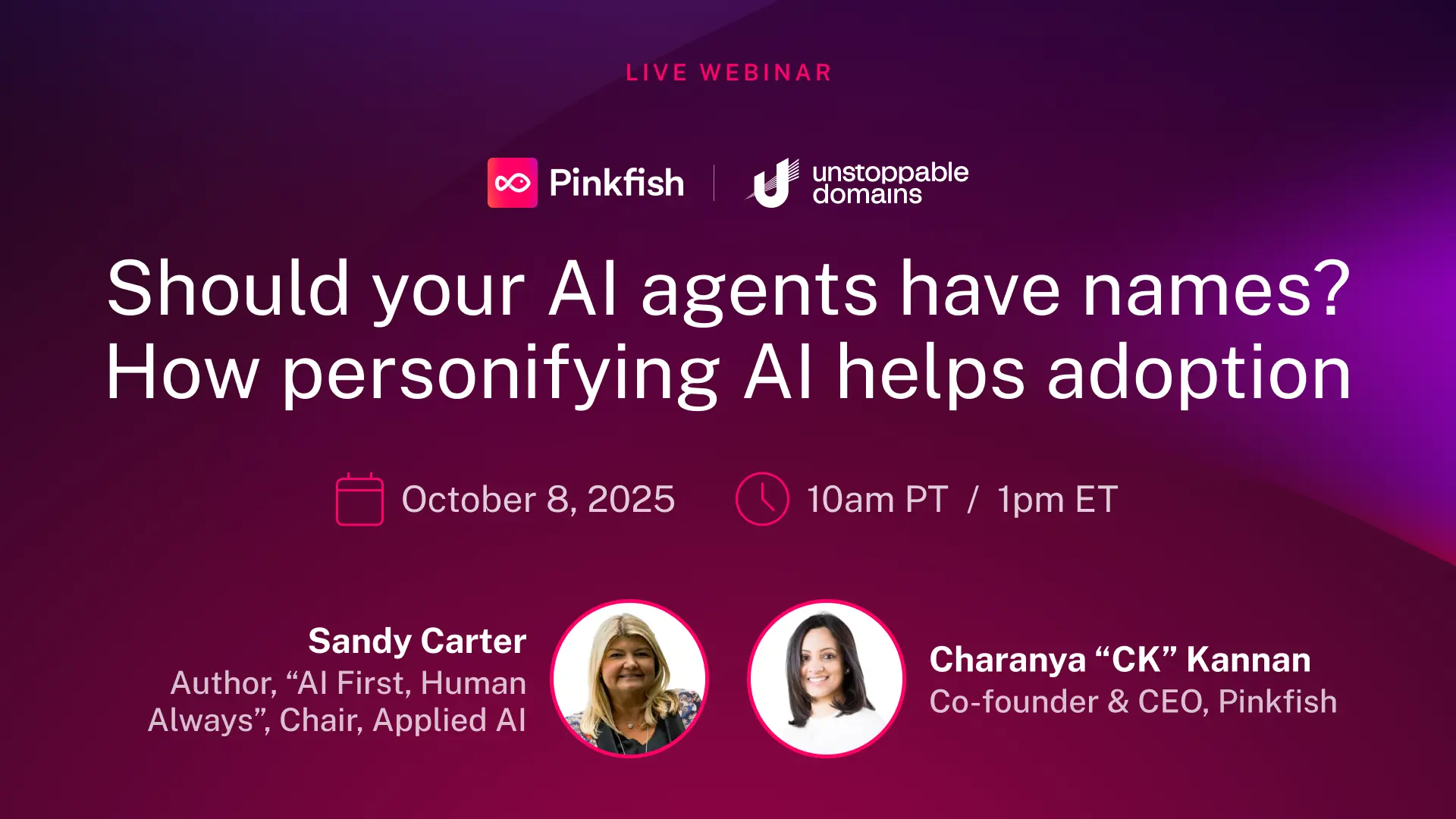

This was the central theme of Pinkfish’s latest webinar, “Should your AI agents have names?”, where Sandy Carter, CBO and Head of GTM at Unstoppable Domains, joined Charanya “CK” Kannan, CEO and Co-Founder of Pinkfish, to explore the psychology, design, and real-world consequences of personifying AI.

For enterprise leaders investing millions into automation, it’s no longer enough for an AI system to “work”. It has to be accepted.

Teams that see AI as a faceless, unpredictable tool hesitate to rely on it. Teams that see it as a reliable teammate, one with a name, a purpose, and predictable behavior, integrate it faster, use it more effectively, and see ROI sooner.

That’s the human gap Pinkfish is helping companies close, and it’s why we used the webinar to tackle the questions every AI-driven enterprise is wrestling with today.

It’s a topic with tangible stakes. Enterprises everywhere are investing in AI tools, yet some struggle with low adoption or with confusion over what AI can and can’t do.

Sandy and CK brought clarity to that tension, showing that AI adoption is as much a cultural rollout as it is a technical one.

One of the key ideas came early on and hit a nerve with every enterprise leader listening: why do we even feel compelled to give AI agents names in the first place?

Sandy Carter explained that the answer lies in a deeply human instinct called anthropomorphism—our tendency to project human traits onto non-human entities.

“If you do name an agent, then there are certain things that link the value and the trust, because we trust humans so much more.”

She added that motives matter. If naming is used to deceive, it breaks trust. But when done transparently, it can help users see the AI as a credible teammate rather than an invisible algorithm:

“If you’re trying to generate trust, like you’re creating an AI org chart… then I think it can have a really positive impact,” Sandy said, describing the fine line every enterprise has to walk.

Naming changes behavior. In the rollouts Sandy has seen, a well-chosen name speeds first use, reduces hesitation in daily tasks, and sets clear expectations for what the agent does:

“Naming AI agents can absolutely accelerate the adoption, but only under the right circumstances.”

The first place naming helps is visibility. In teams that use an agent every day, a name becomes a natural shortcut. People say “Ask Copilot” instead of “open the summarization tool”, which normalizes the habit and removes the small moments of friction that slow adoption.

It also lowers anxiety when the name fits the culture and the stakes. Sandy described one organization where a calm, gender-neutral name made employees more willing to try the agent. The tone signaled “steady and serious”, which helped skeptical teams get past the first-use barrier.

Clarity is the third lever. Function-signaling names teach correct usage and set boundaries, which cuts down on off-label requests and keeps accountability clear.

When naming backfires, it does so for predictable reasons. Overpromising is the fastest way to lose trust: names that imply omniscience, such as Sage, Genius, or even God, inflate expectations the system can’t meet. As Sandy warned: “Trust evaporates really fast when you try to do something like that.”

The discussion also revolved around a simple truth: a name can invite people in, but trust comes from behavior, and behavior comes from design.

Sandy and CK kept returning to the same point: reliable agents are the outcome of clear scope, tone, and human oversight.

Early on, Sandy grounded the idea in accountability. Teams can move fast on low-risk tasks, but the lines must be explicit once dollar amounts or risk go up. That framing turns guardrails into day-to-day practice: automate what’s safe, escalate what isn’t, and make the thresholds visible to everyone.

Scope drift was the other recurring theme. Sandy shared the story of her daughter’s fashion agent, which started talking about cars after a few odd prompts until they reset the boundary: “You’re a fashion agent. You’re not a car agent.”

Without boundaries, AI agents can wander. With them, they become predictably useful.

The takeaway for leaders was pragmatic: design guardrails that people can see and understand, covering what the agent does, where it stops, how it sounds, and who signs off when the stakes are high.

Some of the most creative examples shared in the webinar showed how companies use naming to reflect brand DNA and team ownership.

Sandy cited a marketing company that named all of its AI agents after characters from a TV show the team voted on, complete with an org chart where AI agents appeared alongside their human teammates.

Another example came from Bank of America, whose financial assistant Erica now serves millions.

“She has 38 million users today. And her goal is to help you understand your money. She doesn’t make a financial decision for you.”

The trend is clear: enterprises are realizing that AI agents need their own identity architecture, one that signals function, culture, and tone while staying aligned with governance and accountability.

Give an agent a name only when you can back it with a working relationship that people can see. That was one of the key points of the webinar: identity paired with ownership, scope, and a visible path to escalation.

Sandy set the accountability bar: “When you engage with an AI agent, it is you who has the final say.” CK underscored that the product itself must explain the agent’s scope at the point of contact, every time it’s needed.

Treat naming as an adoption signal. If the agent’s role, limits, approvals, and voice surface at the moment of use, the name accelerates first use, correct use, and measurable performance. If those signals aren’t present, the name slows you down.

Watch the webinar recording to get all the insights you need to decide whether you should name your AI agents (and how).

See how Pinkfish’s built-in guardrails and enterprise-grade governance turn AI agents into systems teams actually adopt—book a demo.