Skip the training loops. Let your LLMs evolve with every use.

.png)

There's a lot of buzz about reinforcement learning as the path to self-improving AI systems. And while RL has produced impressive results - it's how frontier models from OpenAI, Anthropic, and Google are trained- it's fundamentally misaligned with how most developers and companies actually build with LLMs today. The reality is that the vast majority of organizations don't train their own models, they consume them as a service from these providers. This creates three critical barriers to adopting RL:

First, you need access to model weights or specialized infrastructure. Traditional RL approaches like RLHF require direct access to model weights for training. While some API providers offer fine-tuning services and there are prompt-level RL techniques, these still demand substantial ML expertise and dedicated infrastructure.

Second, RL locks you into a specific model version. Whether you're doing full RLHF or API-based fine-tuning, you own and maintain that model snapshot. The strength of the current LLM ecosystem is the rapid pace of improvement - new models from Anthropic, OpenAI, and Google arrive every few months, each bringing substantial capability gains. Organizations that can quickly migrate to the latest models maintain a competitive edge, while those locked into custom-trained versions fall behind as the frontier advances.

Third, RL requires specialized expertise that most organizations don't have and can't justify hiring. Setting up reward models, managing training infrastructure, and debugging RL pipelines demands a team of ML engineers and researchers - a significant investment that only makes sense at scale.

But that doesn't mean we don't want our systems to improve based on usage and feedback. The promise of the AI era is building systems that aren't static, that learn from mistakes and get better over time. We just need an approach that works within the constraints of the API-first, model-agnostic world most of us operate in. Enter Agentic Context Engineering (ACE) - a technique that delivers the benefits of learning from experience without the overhead, lock-in, or expertise requirements of RL. It's the perfect Goldilocks solution: sophisticated enough to drive real improvement, practical enough to implement with a small team, and portable enough to work across any LLM provider.

In a typical LLM system, context is the information you provide alongside your query to help the model understand what you're asking and how to respond. Think of it as setting the stage before the performance begins. This context usually includes several components: a system prompt that defines the assistant's role and capabilities ("You are a helpful coding assistant"), the current user message, relevant chat history from the conversation, and sometimes additional information like retrieved documents or API schemas.

Most production LLM applications use what's called a "context generator" - a piece of code that dynamically assembles these components into the final prompt sent to the model. For example, when you ask a question in a chatbot, the context generator might concatenate: the base system instructions, the last 10 messages from your conversation history, any relevant knowledge base articles, and finally your new question. The exact composition varies based on the task at hand, a code generation system might inject API documentation, while a customer service bot might include company policies.

Most companies already have a system like this in place; it's the de facto standard for building an LLM powered service. And if you're working at a company that doesn't and you're just beginning your journey, this is where you should start.

This approach is technically called in-context learning (ICL), and it's powerful because you can change the model's behavior without any training - you're just providing information at inference time. However, traditional ICL has a key limitation: the context resets between sessions. Each new conversation starts fresh with the same base prompt. If the model makes a mistake in one session, there's no mechanism for that learning to carry forward. You might have a conversation today where your LLM-Excel-Copilot learns that your company's Excel exports always need specific formatting, but tomorrow it will have forgotten that insight entirely. The context evolves dynamically within a single session as messages accumulate, but there's no memory bridge between sessions, no way for the system to get systematically better based on what it learned from previous interactions.

A note on memory systems: Some LLM providers (like Claude and ChatGPT) now offer memory features that persist facts across sessions -remembering your preferences, past conversations, and personal context. These help bridge the session gap for individual users, capturing things like "Alex prefers dark mode charts" or "always include units in calculations." However, these memory systems require explicit user management - you need to actively review, add, and remove memories, making them a manual process rather than automatic improvement. They're also inherently personal and conversational—they remember facts about you and your past interactions, but they don't systematically extract generalizable lessons that improve the system's core capabilities. If the model struggles with a specific API pattern, personal memory might help that one user in future conversations (if they manually add it to memory), but it won't help other users facing the same issue.

Recently, Stanford researchers published a paper that formalized and benchmarked a technique many practitioners have been experimenting with: Agentic Context Engineering (ACE). While traditional in-context learning resets between sessions, ACE introduces a crucial innovation - persistent and automatic learning across sessions. Instead of starting fresh each time, ACE systems maintain and evolve a "playbook" of strategies, insights, and lessons learned that carries forward from one interaction to the next.

The approach sits in an interesting middle ground between static prompting and full reinforcement learning. Like RL, ACE involves learning as a function of feedback and reinforcement - the system observes what works and what doesn't, then adjusts accordingly. But unlike RL, it doesn't require model training, weight updates, or any of the infrastructure overhead that makes RL impractical for most organizations. Instead, ACE operates purely at the context level, systematically capturing and organizing knowledge that improves future performance.

The key insight from the Stanford paper is captured in this line: "Rather than compressing contexts into distilled summaries, ACE treats them as evolving playbooks that accumulate and organize strategies over time." This is a crucial distinction. Many earlier attempts at cross-session learning tried to create concise summaries "here's what we learned, compressed into three bullet points." But compression inevitably loses detail, and those details often matter. ACE takes the opposite approach: it embraces comprehensive, detailed playbooks that grow richer over time, trusting modern long-context models to find and apply the relevant pieces when needed. The playbook isn't just documentation—it's an accumulating knowledge base of domain-specific heuristics, common pitfalls, successful patterns, and edge cases discovered through actual usage.

The Stanford paper proposes a specific technique to manage the playbook through an agentic architecture with three specialized components:

The Three-Role System:

Key Innovation: Incremental Delta Updates

Rather than regenerating contexts in full (which leads to "context collapse"), ACE represents contexts as collections of structured, itemized bullets. Each bullet consists of:

The system produces compact "delta contexts" - small sets of candidate bullets distilled by the Reflector and integrated by the Curator. This avoids the computational cost and latency of full rewrites while ensuring past knowledge is preserved and new insights are steadily appended.

Grow-and-Refine Mechanism

To prevent unbounded growth, ACE employs a "grow-and-refine" approach where bullets with new identifiers are appended while existing bullets are updated in place (e.g., incrementing counters). A de-duplication step then prunes redundancy by comparing bullets via semantic embeddings. This refinement can be performed proactively (after each delta) or lazily (only when the context window is exceeded).

Multi-Epoch Adaptation

ACE supports revisiting the same queries multiple times to progressively strengthen the context, allowing the playbook to evolve through multiple passes over training data.

This structured approach prevents the two major pitfalls identified in the paper: brevity bias (collapsing into generic summaries) and context collapse (dramatic information loss during rewrites), while enabling contexts that scale with long-context models and accumulate comprehensive domain knowledge over time.

Claude recently released "Skills," a feature that shares conceptual DNA with ACE but takes a different approach. Skills are specialized folders containing instructions, scripts, and resources that Claude can dynamically load when relevant to a task. Like ACE's playbooks, Skills provide Claude with domain-specific expertise—whether that's working with Excel spreadsheets, following brand guidelines, or executing custom workflows. The key difference is in adaptability: while ACE playbooks evolve through experience, learning from successes and failures to incrementally improve over time, Claude Skills are static packages of expertise that remain unchanged unless manually updated by their creators.

Skills work by having Claude scan available skill folders and load only the minimal information needed for the current task, keeping the system fast while accessing specialized knowledge. They're composable (multiple skills can work together), portable (the same skill works across Claude apps, Claude Code, and API), and can include executable code for tasks where traditional programming is more reliable than token generation. When you use Skills, you'll even see them appear in Claude's chain of thought as it works through problems.

The relationship between ACE and Skills is complementary: Skills provide the initial structured expertise (like a well-crafted starting playbook), while an ACE-like system could theoretically sit on top, observing which skills succeed or fail in practice and proposing updates to make them better over time.

We've been practicing ACE for over a year at Pinkfish, long before the Stanford paper gave it a formal name. Our core product is a coding agent that generates workflow automation code, and it does the following:

These skills are exactly what the Stanford paper calls "playbooks"—they encode all the tips, tricks, edge cases, and domain knowledge needed to generate runnable code.

Our ACE journey started manually. We'd observe failures, diagnose the root cause, and update the relevant skill playbook. Over time, these playbooks grew rich with the little edge cases you only discover through real-world usage.

Take OneDrive as an example. You can search for files by name. However, downloading, copying, and deleting requires the file's unique ID. Without guidance, the LLM will naively generate code that tries to download a file by name (since it works for other functions). But it fails of course. The playbook captures this lesson: "Always retrieve the item ID first via search or list, then use that ID for downloading, copying, and deleting." With this encoded, the system generates correct code on the first try instead of erroring out.

We recently migrated to an agentic MCP-based architecture where the LLM operates in a loop: call a tool, observe the result, decide the next action. This means the agent can recover from errors by trying alternative approaches based on what it learns in real-time. You might think this eliminates the need for detailed skills—after all, can't the agent just figure it out through trial and error?

Not quite. Here's what happens without the skill guidance:

Without Playbook Knowledge (4 steps, ~20 seconds):

With Playbook Knowledge (2 steps, immediate):

Yes, the agent eventually recovers—but it makes the same mistake every single time, wasting tokens and adding latency. The playbook compresses this learned experience into an upfront instruction that prevents the error from happening in the first place. That's 20 seconds saved, fewer tokens consumed, and a more reliable user experience.

And this compounds: with dozens of tools and hundreds of potential workflow patterns, the difference between trial-and-error recovery and informed execution becomes substantial.

You might be thinking: why have separate skills at all? Can't we just put all this guidance directly in the MCP tool descriptions or parameter schemas?

The problem is that tool schemas describe what tools do, not how to use them effectively. A tool schema might say "onedrive_download_file downloads a file given its itemId" but it can't capture:

Tool descriptions need to stay concise for effective tool selection. When LLMs are deciding which of 50+ tools to use, verbose descriptions create noise. Parameter schemas should describe parameters—their types, constraints, and purpose—not encode complex usage patterns.

Skills solve this by providing contextual expertise that lives outside the tool definitions. They're loaded when relevant, giving the agent detailed knowledge about how to successfully accomplish tasks without polluting the tool selection process. Think of tool schemas as the API reference documentation, and skills as the comprehensive implementation guide with examples, best practices, and gotchas learned from production usage.

Of course, manually reviewing every interaction and updating skill files doesn't scale. As your system handles hundreds of thousands of requests daily, you need automation. This is where the systematic approach described in the Stanford paper becomes essential: to automatically identify failures, extract lessons, and incrementally update playbooks without human intervention.

We're building an automated skill update pipeline based on the ACE framework that triggers on three primary signals:

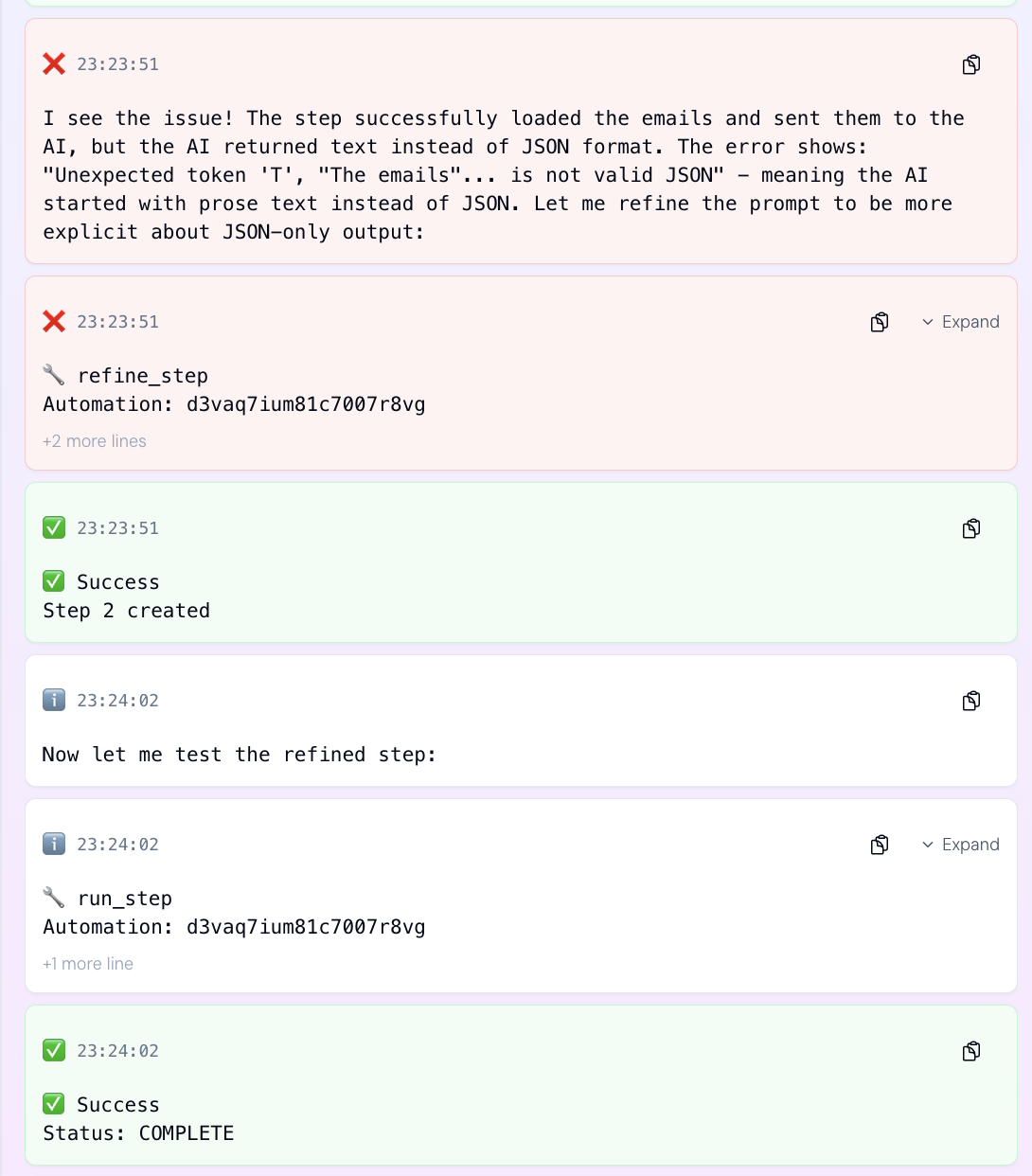

Signal 1: Fail-Repair-Success Loop

When code fails and the user either manually fixes it or uses our "let me fix it for you" tool, we capture the complete trajectory: the original generated code, the error message, and the corrected version. This is a goldmine of learning. We know exactly what went wrong and what the fix should have been.

Signal 2: User Thumbs Down

When a user explicitly signals dissatisfaction with a generated result, even if it technically executed successfully. This catches cases where the code runs but doesn't match user intent or best practices.

Signal 3: Skill Performance Monitoring

We continuously track success rates for each skill. When a skill's failure rate exceeds a threshold (e.g., >15% failures), it's automatically flagged for review and potential updating. This catches systemic issues that might not trigger the other signals. Perhaps an API changed, or the skill's instructions have become outdated.

When we detect these patterns, they flow into our skill update pipeline following the ACE architecture:

Step 1: Generator

In the ACE framework, the Generator is the component that produces reasoning trajectories while solving tasks. At Pinkfish, our Generator is our production code generation system, which has been running for quite some time. As it generates workflow automation code for users, it produces complete reasoning trajectories: the thought process, the code it generates, the APIs it calls, the execution results, and any recovery attempts in response to errors. All of these trajectories are logged during normal operation.

Step 2: Reflector

The Reflector is where we analyze trajectories to extract insights, but this happens in two stages:

First, log analysis and aggregation identifies the key moments worth reflecting on. When our monitoring detects one of the three failure signals (fail-repair-success loop, user thumbs down, or elevated skill failure rate), we extract the relevant trajectories from our production logs. This aggregation layer filters thousands of trajectories down to the specific failures that contain learning opportunities, grouping related failures and pairing them with successful corrections when available.

Second, LLM-based reflection analyzes these extracted trajectories to identify what went wrong and extract concrete insights. The Reflector compares failed attempts with successful corrections or examines error patterns across multiple attempts. Its job is diagnosis and insight extraction. It answers questions like:

The Reflector can optionally refine these insights across multiple iterations, strengthening the quality of extracted lessons. Importantly, it also evaluates which existing playbook bullets were helpful or harmful for each attempt, providing feedback that guides curation.

Step 3: Curator with Classification

The Curator receives the Reflector's insights and synthesizes them into structured delta updates. Before generating updates, our Curator adds a classification step to determine the appropriate scope:

The Curator then generates compact delta entries. These are either new bullets with unique IDs or updates to existing bullets (incrementing their helpful/harmful counters). It merges them into the appropriate playbook level (global, org-level, or user-level). This preserves existing knowledge while adding new insights.

Unlike monolithic context rewriting, these delta updates are:

Step 4: Grow-and-Refine

As deltas accumulate, the playbook grows. To prevent unbounded expansion and redundancy, we apply the grow-and-refine mechanism:

Step 5: Review & Deployment

Initially, all updates go through a manual approval cycle where we review proposed changes before they go live. As we build confidence in the system's judgment, we'll gradually automate approval for high-confidence, low-risk updates while keeping human review for significant changes to core skills.

This tiered approach gives us the best of both worlds: rapid learning from collective experience at the global level, while still accommodating organization-specific practices and individual preferences without polluting the shared knowledge base.

.png)

Agentic Context Engineering represents a pragmatic path to self-improving AI systems that works within the constraints most of us face: API-only access to models, the need to stay current with rapid model improvements, and limited ML expertise. By treating context as an evolving playbook rather than a static prompt, we can build systems that learn from every interaction and get systematically better over time—without the overhead of reinforcement learning.

At Pinkfish, we've seen firsthand how this approach transforms system reliability and user experience. Our skills have grown from simple API documentation to comprehensive playbooks encoding hundreds of edge cases and best practices discovered through real-world usage. And as we move from manual curation to automated skill evolution, we're building a system that can scale with our growing user base while maintaining the domain expertise that makes our code generation reliable.

If you're building LLM systems and want them to improve over time, you probably don't need reinforcement learning. You need Agentic Context Engineering.